I used to work on “non-convex” regularization with -penalties, that is, studying the Tikhonov functional

The regularization properties are quite nice as shown by Markus Grasmair in his two papers “Well-posedness and convergence rates for sparse regularization with sublinear penalty term” and “Non-convex sparse regularisation” and by Kristian Bredies and myself in “Regularization with non-convex separable constraints”.

The next important issue is to have some way to calculate global minimizers for (1). But, well, this task may be hard, if not hopeless. Of course one expects a whole lot of local minimizers.

It is quite instructive to consider the simple case in which is the identity first:

Example 1 Consider the minimization of

This problem separates over the coordinates and hence, can be solved by solving the one-dimensional minimization problem

- For

we get

.

- Replacing

by

leads to

instead of

.

Hence, we can reduce the problem to: For

find

One local minimizer is always

since the growth of the

-th power beats the term

. Then, for

large enough, there are two more extrema for~(4) which are given as the solutions to

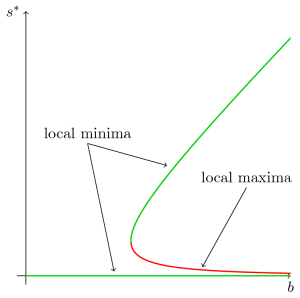

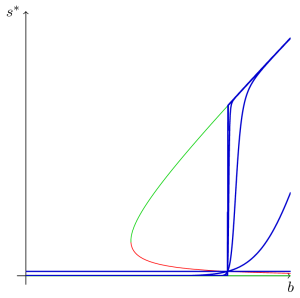

one of which is a local maximum (the one which is smaller in magnitude) and the other is a local minimum (the one which is larger in magnitude). This is illustrated in the following “bifurcation” picture:

Now the challenge is, to find out which local minimum has the smaller value. And here a strange thing happens: The “switching point” for

at which the global minimizer jumps from

to the upper branch of the (multivalued) inverse of

is not at the place at which the second local minimum occurs. It is a little bit larger: In “Convergence rates and source conditions for Tikhonov regularization with sparsity constraints” I calculated this “jumping point” the as the weird expression

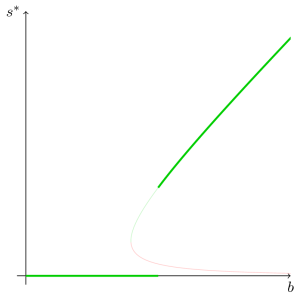

This leads to the following picture of the mapping

1. Iterative re-weighting

One approach to calculate minimizers in~(1) is the so called iterative re-weighting, which appeared at least in “An unconstrained minimization for sparse solution of under determined linear systems” by Ming-Jun Lai and Jingyue Wang but is probably older. I think for the problem with equality constraints

the approach at least dates back to the 80s but I forgot the reference… For the minimization of (1) the approach goes as follows: For choose a

and a small

and rewrite the

-quasi-norm as

The necessary condition for a minimizer of

is (formally take the derivative)

Note that the exponent is negative (which is also a reason for the introduction of the small

). Aiming at an iteration, we fix some of the

‘s and try to solve for others: If we have a current iterate

we try to find

by solving

for . This is the necessary condition for another minimization problem which involves a weighted

-norm: Define (non-negative) weights

an iterate

Lai and Wang do this for which has the benefit that each iteration can be done by solving a linear system. However, for general

each iteration is still a convex minimization problem. The paper “Convergence of Reweighted

Minimization Algorithms and Unique Solution of Truncated

Minimization” by Xiaojun Chen and Weijun Zhou uses

and both papers deliver some theoretical results of the iteration. Indeed in both cases one can show (subsequential) convergence to a critical point.

Of course the question arises if there is a chance that the limit will be a global minimizer. Unfortunately this is not probable as a simple numerical experiment shows:

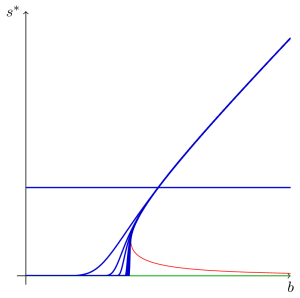

Example 2 We apply the iteration (5) to the one dimensional problem (3) in which we know the solution. We do this for many values of

and plot the value of

against the limit of the iteration. Good news first: Everything converges nicely to critical points as expected. Even better:

can be really small–machine precision works. The bad news: The limit depends on the initial value. Even worse: The method frequently ends on “the wrong branch”, i.e. in the local minimum which is not global. I made the following experiment: I took

, set

and chose

. First I initialized for all values of

with

. This produced the following output (I plotted every fifth iteration):

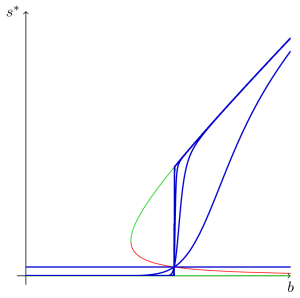

Well, the iteration always chose the upper branch… In a second experiment I initialized with a smaller value, namely withfor all

. This gave:

That’s interesting: I ended at the upper branch for all values below the point where the lower branch (the one with the local maximum) crosses the initialization line. This seems to be true in general. Starting with

gave

Well, probably this is not too interesting: Starting “below the local maximum” will bring you to the local minimum which is lower and vice versa. Indeed Lai and Wang proved in their Theorem 2.5 that for a specific setting (of completely full rank, sparsity high enough) there is an

small enough such that the method will pick the global minimizer. But wait—they do not say anything about initialization… What happens if we initialize with zero? I have to check…

By the way: A similar experiment as in this example with different values of showed the same behavior (getting the right branch if the initialization is ok). However: smaller

gave much faster convergence. But remember: For

(experimentally the fastest) each iteration is an

penalized problem while for

one has to solve a linear system. So there seems to be a tradeoff between “small number of iterations in IRLP” and “complexity of the subproblems”.

2. Iterative thresholding

Together with Kristian Bredies I developed another approach to these nasty non-convex minimization problems with -quasi-norms. We wrote a preprint back in 2009 which is currently under revision. Moreover, we always worked in a Hilbert space setting that is

maps the sequence space

into a separable Hilbert space.

Remark 1 When showing results for problems in separable Hilbert space I sometimes get the impression that others think this is somehow pointless since in the end one always works with

in practice. However, I think that working directly in a separable Hilbert space is preferable since then one can be sure that the results will not depend on the dimension

in any nasty way.

Basically our approach was, to use one of the most popular approaches to the -penalized problem: Iterative thresholding aka forward-backward splitting aka generalized gradient projection. I prefer the last motivation: Consider the minimization of a smooth function

over a convex set

by the projected gradient method. That is: do a gradient step and use the projection to project back onto

:

Now note that the projection is characterized by

Now we replace the “convex constraint” by a penalty function

, i.e. we want to solve

Then, we just replace the minimization problem for the projection with

and iterate

The crucial thing is, that one needs global minimizers to obtain . However, for these

penalties with

these are available as we have seem in Example~1. Hence, the algorithm can be applied in the case

One easily proves that one gets descent of the objective functional:

Lemma 1 Let

be weakly lower semicontinuous and differentiable with Lipschitz continuous gradient

with Lipschitz constant

and let

be weakly lower semicontinuous and coercive. Furthermore let

denote any solution of

Then for

it holds that

Proof: Start with the minimizing property

and conclude (by rearranging, expanding the norm-square, canceling terms and adding to both sides) that

Finally, use Lipschitz-continuity of to conclude

This gives descent of the functional value as long as . Now starts the hard part of the investigation: Under what circumstances do we get convergence and what are possible limits?

To make a long story short: For -penalties (and also other non-convex penalties which leave the origin with infinite slope) and fixed step-size

one gets that every subsequence of the iterates has a strong accumulation point which is a fixed point of the iteration for that particular

as long as

. Well that’s good, but here’s the bad news: As long as

we do not obtain the global minimizer. That’s for sure: Consider

and any

…

However, with considerably more effort one can show the following: For the iteration with

(and another technical condition on the Lipschitz constant of

) the iterates have a strong accumulation point which is a solution

and hence, satisfies necessary conditions for a global minimizer.

That’s not too bad yet. Currently Kristian and I, together with Stefan Reiterer, work to show that the whole sequence of iterates converges. Funny enough: This seems to be true for and

with rational

in

… Basically, Stefan was able to show this with the help of Gröbner bases and this has been my first contact with this nice theory. We hope to finalize our revision soon.

August 25, 2012 at 9:42 pm

[…] correctly, she also treated “iteratively reweighted least squares” as I described in my previous post. Unfortunately, she did not include the generalized forward-backward methods based on -functions […]