In my previous post I illustrated why it is not possible to compute the Jordan canonical form numerically (i.e. in floating point numbers). The simple reason: For every matrix and every

there is a matrix

which differs from

by at most

(e.g. in every entry – but all norms for matrices are equivalent, so this does not really play a role) such that

is diagonalizable. So why should you bother about computing the Jordan canonical form anyway? Or even learning or teaching it? Well, the prime application of the Jordan canonical form is to calculate solutions of linear systems of ODEs. The equation

with matrix and initial value

(both could also be complex). This system has a unique solution which can be given explicitly with the help of the matrix exponential as

where the matrix exponential is

It is not always simple to work out the matrix exponential by hand. The straightforward way would be to calculate all the powers of , weight them by

and sum the series. This may be a challenge, even for simple matrices. My favorite example is the matrix

Its first powers are

You may notice that the Fibonicci numbers appear (and this is pretty clear on a second thought). So, finding a explicit form for leads us to finding an explicit form for the

-th Fibonacci number (which is possible, but I will not treat this here).

Another way is diagonalization: If is diagonalizable, i.e. there is an invertible matrix

and a diagonal matrix

such that

you see that

and the matrix exponential of a diagonal matrix is simply the exponential function applied to the diagonal entries.

But not all matrices are diagonalizable! The solution that is usually presented in the classroom is to use the Jordan canonical form instead and to compute the matrix exponential of Jordan blocks (using that you can split a Jordan block into the sum of a diagonal matrix

and a nil-potent matrix

and since

and

commute one can calculate

and both matrix exponentials are quite easy to compute).

But in light of the fact that there are a diagonalizable matrices arbitrarily close to any matrix, on may ask: What about replacing a non-diagonalizable matrix with a diagonalizable one (with a small error) and then use this one?

Let’s try this on a simple example:

We consider

which is not diagonalizable. The linear initial value problem

has the solution

and the matrix exponential is

So we get the solution

Let us take a close-by matrix which is diagonalizable. For some small we choose

Since is upper triangular, it has its eigenvalues on the diagonal. Since

, there are two distinct eigenvalues and hence,

is diagonalizable. Indeed, with

we get

The matrix exponential of is

Hence, the solution of ,

is

How is this related to the solution of ? How far is it away?

Of course, the lower right entry of converges to

for

, but what about the upper right entry? Note that the entry

is nothing else that the (negative) difference quotient for the derivative of the function at

. Hence

and we get

as expected.

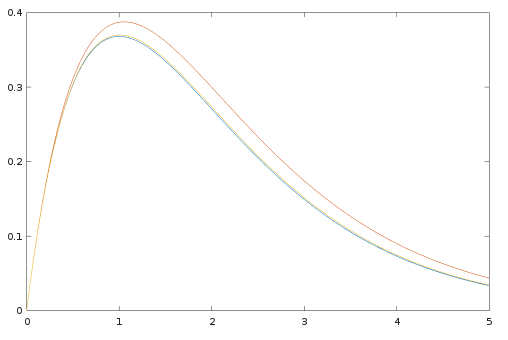

It turns out that a fairly big is already enough to get a quite good approximation and even the correct asymptotics: The blue curve it first component of the exact solution (initialized with the second standard basis vector), the red one corresponds

and the yellow on (pretty close to the blue on) is for

.

to $\e

Leave a comment